Eons uses a versioning system compatible with Semantic Versioning. However, we change some of the semantics to simplify usage for real-world products.

We still use [MAJOR].[MINOR].[PATCH] versions but they are more accurately named:

- Requirements

- Implementation

- Updates

Thus, we say [REQUIREMENT].[IMPLEMENTATION].[UPDATE].

A simple test to know which version number to increment is:

- Requirements – did the requirements of the project change?

- Implementation – did the tests change?

- Updates – did the design, code, or dependencies change?

Always take the highest: if both the requirements and the tests changed, increment only the requirement version.

When a version changes, reset the counters on all versions beneath / to the right of it. For example, if the implementation version changes, set the update version to 0 and leave the requirement version unchanged.

Never decrement a version number. You can change things back to the way they were by incrementing the appropriate version number. Entropy must always increase.
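The rules above can be sketched in a few lines of Python. This is a minimal illustration, not an official Eons tool: the `Version` type, the `bump` function, and the three boolean flags are hypothetical names chosen for this example.

```python
from typing import NamedTuple


class Version(NamedTuple):
    """Hypothetical container for [REQUIREMENT].[IMPLEMENTATION].[UPDATE]."""
    requirement: int     # MAJOR
    implementation: int  # MINOR
    update: int          # PATCH

    def __str__(self) -> str:
        return f"{self.requirement}.{self.implementation}.{self.update}"


def bump(version: Version, *, requirements_changed: bool = False,
         tests_changed: bool = False, other_changed: bool = False) -> Version:
    """Return the next version, honoring only the highest-ranking change."""
    if requirements_changed:
        # Requirements changed: reset both counters to the right.
        return Version(version.requirement + 1, 0, 0)
    if tests_changed:
        # Tests changed: reset the update counter, keep the requirement version.
        return Version(version.requirement, version.implementation + 1, 0)
    if other_changed:
        # Design, code, or dependencies changed.
        return Version(version.requirement, version.implementation, version.update + 1)
    return version  # nothing changed, so there is nothing to increment


current = Version(1, 2, 3)
print(bump(current, tests_changed=True))                             # 1.3.0
print(bump(current, requirements_changed=True, tests_changed=True))  # 2.0.0
```

Because `bump` only ever adds one to a field and zeroes the fields to its right, the result always compares greater than or equal to its input as a tuple, so a version is never decremented.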

Requirements

Requirements represent the purpose or “environmental niche” that the versioned product is designed for. For example, the requirement (major) version of a hammer has not been changed in a long time.

Stating requirements only implies a usage and does not guarantee the product will be used for the intended purpose. For example, you could put a hammer on the edge of a table and use it to fling nails around – no one is stopping you – but that is a use case outside of the requirements that drive hammer production.

It is up to managers to set requirements. Perfect management (and foresight) results in unchanging and everlasting requirements. Changing the requirements can be seen as a pivot or as developing a separate product from the old.

Implementation

Our backwards compatibility is only as rigid as what we test for. If functionality exists but is not tested for, we cannot reasonably guarantee that the functionality will be maintained. This is the main difference between the Eons versioning system and that laid out by Semver. When we talk of “backwards compatibility”, we speak of what requirements we attempt to satisfy through tests while knowing that the tests themselves are imperfect.

Implementation thus represents how well the requirements are being met and can indicate how dependable the product might be moving forward. A stable and mature project should be able to point to test cases to show that backwards compatibility has been maintained. Similarly, if you choose to build on a product that does not test for your use case, then you are using the product in an unsupported manner.

Under Eons versioning, implementation (minor) releases can “break” backwards compatibility. This happens when that compatibility was never defined, or when the tests themselves are identified as the source of a problem and are inhibiting the project from meeting the requirements.

Both managers and engineers should collaborate on implementation to ensure that all requirements are being adequately tested for. It may also be advantageous to leave features officially untested while they are being developed and add official tests once agreement is reached on how the requirement should be implemented.

Updates

Products need to be built and code needs to be written. Updates are how you do it. Before releasing your product, make sure your tests pass. That’s it!

You should also increment the update (patch) version when updating dependencies; i.e. with any external code changes.

Short Versions

Part of the inspiration for Eons versioning is to be able to utilize floating point numbers to represent versions. By ensuring updates always meet the established requirements, there is effectively no difference between versions 1.2.3 and 1.2.4. Thus, both can be stated as 1.2. The precision of the float can be adjusted to represent orders of magnitude (e.g. 1.20 > 1.19 > 1.2).
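One possible way to produce a short version in code is sketched below. The function name `short_version` and the `digits` parameter are hypothetical. The zero-padding convention is also an assumption made for this example rather than something the text prescribes: it is used so that the resulting floats compare in the same order as the implementation versions they came from.

```python
def short_version(requirement: int, implementation: int, digits: int = 1) -> float:
    """Drop the update number and return REQUIREMENT.IMPLEMENTATION as a float.

    `digits` is the number of decimal places reserved for the implementation
    version (an assumed convention). The implementation number is zero-padded
    to that width.
    """
    if implementation >= 10 ** digits:
        raise ValueError(
            f"implementation version {implementation} needs more than {digits} digit(s)"
        )
    return float(f"{requirement}.{implementation:0{digits}d}")


# Versions 1.2.3 and 1.2.4 share the same short version.
print(short_version(1, 2))  # 1.2

# With two digits of precision, ordering follows the implementation version.
print(short_version(1, 20, digits=2) > short_version(1, 19, digits=2))  # True
print(short_version(1, 19, digits=2) > short_version(1, 2, digits=2))   # True
```

Under this padded convention, implementation version 2 is written as 1.02 once two digits are reserved, which keeps it below 1.19 when the values are compared as ordinary floats.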

Project Quality

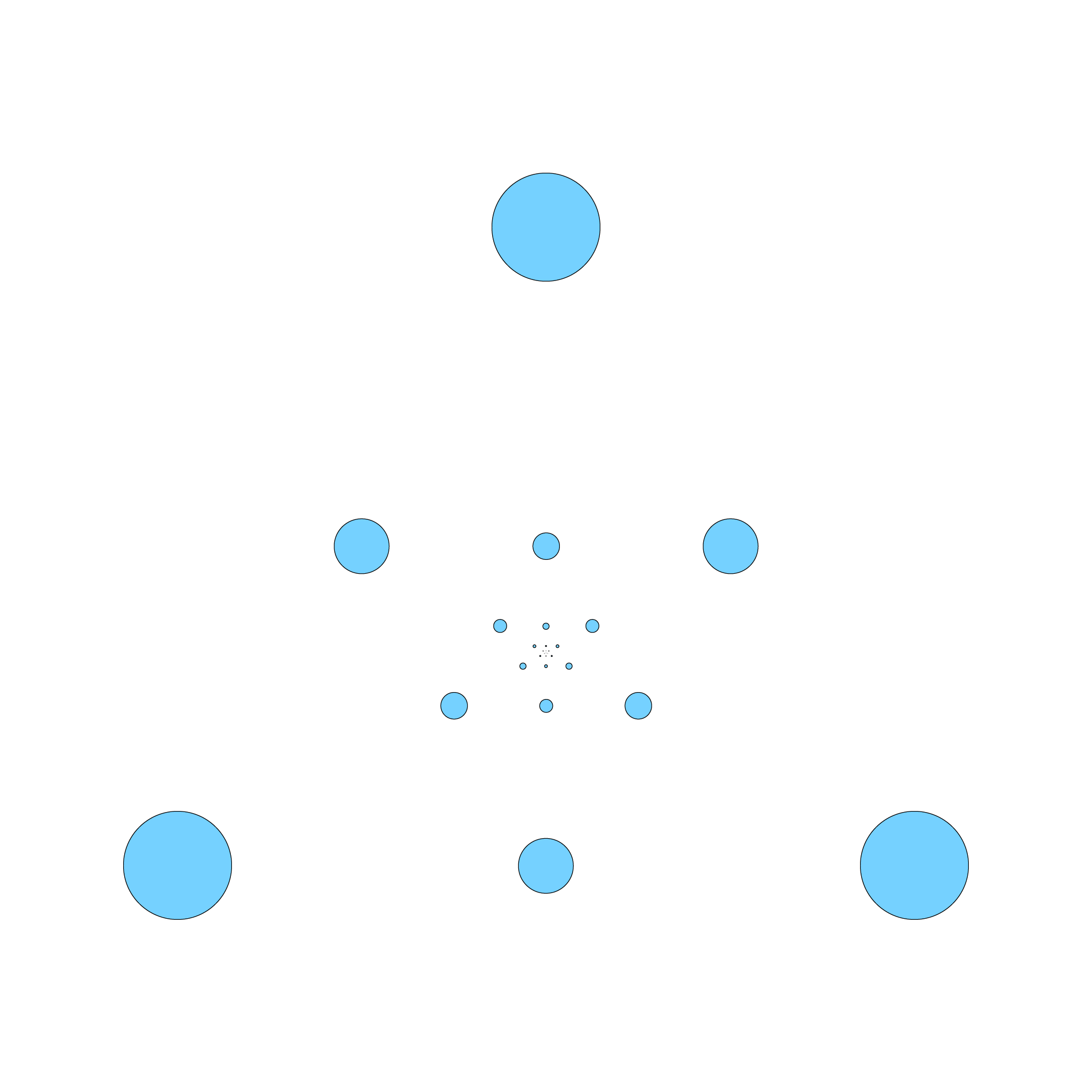

We can go a step further and say what a version means for project quality.

We assume good projects are:

- Reliable (i.e. backwards compatible).

- Active (i.e. lots of updates).

- Well managed (i.e. kept on task).

From the version, we can already tell if a project is active (from the update number) and well managed (from the requirement, or major, version number). Using these values alone, we can state that project_quality_approximation = version_update / version_requirement.

Determining reliability requires an additional metric: test coverage. Once we know how many guarantees are offered, in the form of test coverage and project size, we can state reliability in terms of how rarely the tests change (i.e. inversely to the implementation version number). Thus, reliability = project_size * test_coverage / version_implementation.

Combining all the metrics above, we get: project_quality = (version_update * project_size * test_coverage) / (version_requirement * version_implementation).
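As a worked example, the formulas above translate directly into Python. This sketch makes a few assumptions the text does not specify: `project_size` is treated as a plain number in whatever unit you choose (for instance lines of code), `test_coverage` is a fraction between 0 and 1, and a requirement or implementation version of 0 is rejected because it would divide by zero.

```python
def reliability(project_size: float, test_coverage: float,
                version_implementation: int) -> float:
    """reliability = project_size * test_coverage / version_implementation"""
    if version_implementation <= 0:
        # Assumption: the formula is undefined for a zero implementation version.
        raise ValueError("version_implementation must be positive")
    return project_size * test_coverage / version_implementation


def project_quality(version_requirement: int, version_implementation: int,
                    version_update: int, project_size: float,
                    test_coverage: float) -> float:
    """project_quality as the combined metric defined in the text."""
    if version_requirement <= 0 or version_implementation <= 0:
        # Assumption: zero in the denominator is treated as invalid input.
        raise ValueError("requirement and implementation versions must be positive")
    return (version_update * project_size * test_coverage) / (
        version_requirement * version_implementation
    )


# Hypothetical project at version 1.4.20 with size 1000 and 50% test coverage.
print(reliability(1000, 0.5, 4))             # 125.0
print(project_quality(1, 4, 20, 1000, 0.5))  # 2500.0
```

Note that the result is only meaningful relative to other values computed with the same size unit and coverage measure.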

Quality vs Quantity

Increasing the project size means changing the tests to match, which means increasing the implementation version. This leads us directly into the classic quality vs quantity tradeoff: increasing the size of the project often decreases the quality of the project. This is even more applicable to requirement versions. Increasing the scope of a project by changing its requirements will increase the size of the project at the cost of a requirement (major) version update.

A good project is as large as it needs to be but no larger.

Management: A Necessary Evil?

Using the equation above, direct managerial action always decreases a project’s quality. As stated earlier, perfect management means perfect foresight and results in requirements that never change. We know that this is infeasible in reality, and would like to reiterate that you and your team should never be afraid of changing the requirements or the tests; do what is necessary and don’t apologize for it!

The flip side to this is that management can indirectly improve a project by encouraging work on it, thus increasing the frequency of update releases. Rather than being all-seeing wizards, good managers are often boots-on-the-ground supporters of their workers.

Applicability

We do not recommend judging the work of others based on this interpretation of project quality. Use this equation and its implications to guide your own work and help you improve your projects.